I just got beta access to Google Bard. As many of you know, there is quite a bit of question around the privacy properties of ChatGPT, symbolically represented by the investigation by the Italian Privacy Protection Authority “Garante”. One of the questions is its treatment of the information about a living person, especially misrepresenting it. It poses questions about legality based on GDPR and other privacy regulations worldwide.

So, I went on to test that in ChatGPT and Google Bard.

To test, I asked for information on two living individuals: one relatively obscure but still searchable and documents being available in the internet and one prominent. That is, me and Elon Musk.

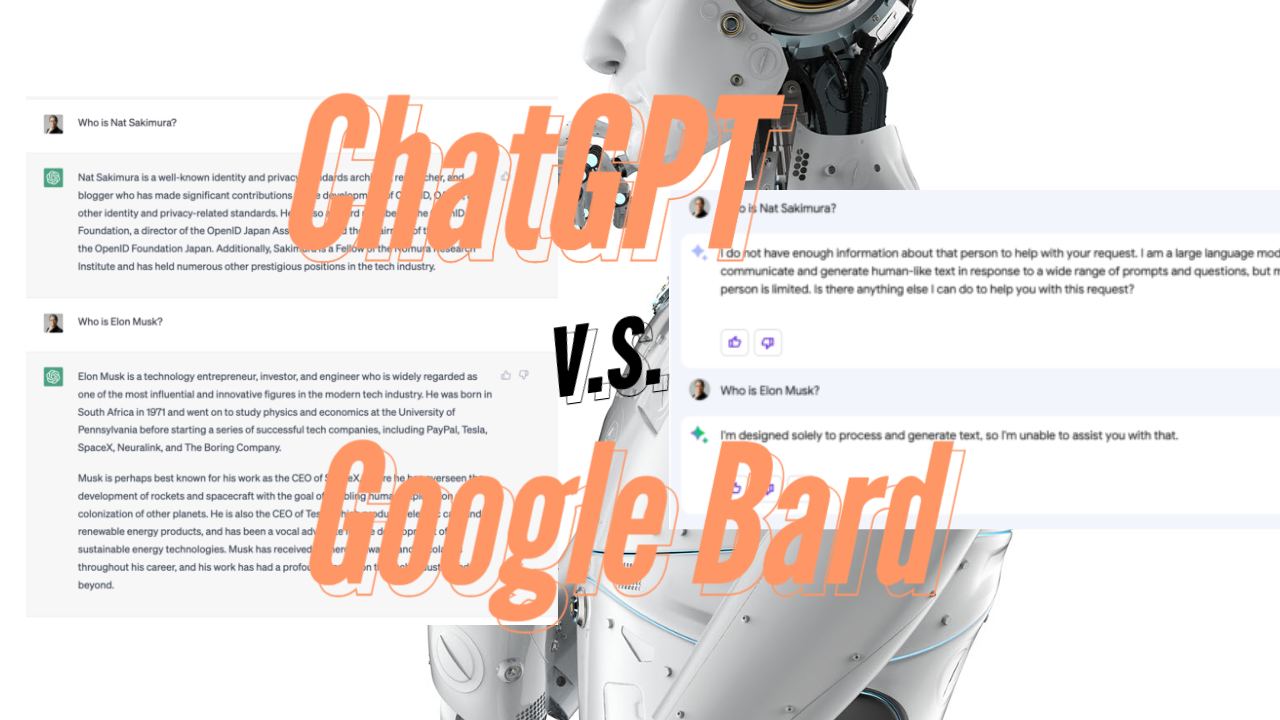

Here is the response from ChatGPT.

It replies. And it actually is full of inaccurate information about me. Since I did not want to disseminate such inaccurate information, I decided to show it as an image instead of text although it is undesirable from the accessibility point of view. Replying such “fake” information about a person may constitute a serious privacy infringement and should be taken seriously.

In contrast, Google Bard replied like this:

While it may be much less interesting for people, this probably is how it should behave1. It should not make up an answer for a living person. A clear WIN for the Google Bard in this round.

I think you are confusing two different areas of law: privacy and libel. This is important in that libel is already well established, but will likely need to be updated separately.

Another problem is responsibility. Who is libel? The source of the data or the aggregator oj that data, or the website that displays that data.

I am not talking about libel here but the accuracy principle. I actually doubt the above response from ChatGPT would form a libel, while it certainly violates the accuracy principle.